Guideline Learning for In-Context Information Extraction

Published in EMNLP, 2023

Recommended citation: Chaoxu Pang, Yixuan Cao, Qiang Ding, and Ping Luo. Guideline Learning for In-Context Information Extraction. In EMNLP, 2023. https://aclanthology.org/2023.emnlp-main.950/

Machine learning algorithms typically learn by updating parameters. In the era of large models, however, updating parameters is infeasible for many closed-source models. How, then, can a model learn without parameter updates and thereby improve its in-context learning capabilities?

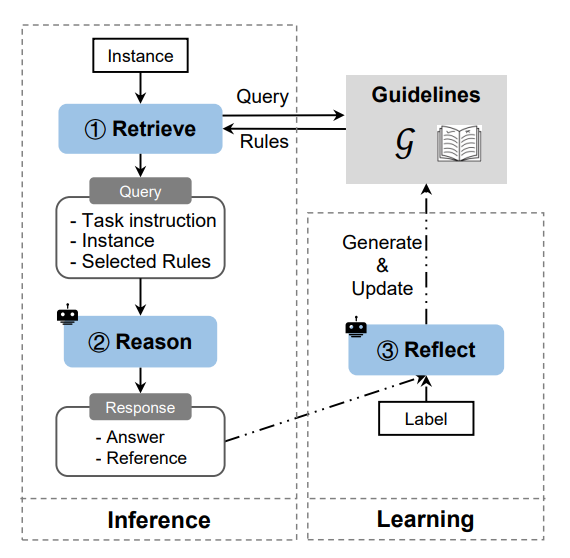

We propose Guideline Learning, a framework based on in-context learning. A guideline is text that describes a task, similar to the instructions given to annotators during labeling. The framework can both generate and follow guidelines, which lets the model learn a set of task guidelines from error samples without updating its parameters. Experiments on event extraction and relation extraction show that Guideline Learning improves the performance of in-context learning on information extraction tasks. This suggests that learning task instructions without updating parameters can serve as a new approach to learning.

On the left side of the figure, during inference, given an input query, the framework retrieves relevant guideline rules to conduct in-context learning. On the right side, during training, given an input query, the prediction and the annotation are fed into the LLM, which reflects on them and generates (or updates) relevant guideline rules. The output of training is a set of guideline rules.
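
The two loops described above can be sketched roughly as follows. This is a minimal illustration and not the paper's implementation: the word-overlap retriever, the prompt layout, and the names `retrieve`, `infer`, `train`, `toy_llm`, and `toy_reflect` are all assumptions made for the example, and the two `toy_*` functions are hypothetical stand-ins for real LLM calls.

```python
from typing import Callable, List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase whitespace tokenization (an assumption, for illustration only)."""
    return set(text.lower().split())


def retrieve(rules: List[str], query: str, k: int = 2) -> List[str]:
    """Return up to k rules that share at least one word with the query.

    Word overlap is a stand-in for whatever retriever a real system would use.
    """
    q = tokenize(query)
    scored = [(len(q & tokenize(rule)), rule) for rule in rules]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [rule for _, rule in scored[:k]]


def infer(llm: Callable[[str], str], rules: List[str], query: str, k: int = 2) -> str:
    """Inference: retrieve relevant guideline rules and place them in the prompt."""
    relevant = retrieve(rules, query, k)
    prompt = "Guidelines:\n" + "\n".join(relevant) + "\nQuery: " + query
    return llm(prompt)


def train(
    llm: Callable[[str], str],
    reflect: Callable[[str, str, str], str],
    samples: List[Tuple[str, str]],
    k: int = 2,
) -> List[str]:
    """Training: learn guideline rules from error samples, with no parameter updates."""
    rules: List[str] = []
    for query, annotation in samples:
        prediction = infer(llm, rules, query, k)
        if prediction != annotation:
            # Reflect on the wrong prediction and the annotation to get a rule.
            new_rule = reflect(query, prediction, annotation)
            if new_rule not in rules:
                rules.append(new_rule)
    return rules


def toy_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: predicts an event only if a rule names it."""
    return "Transfer-Ownership" if "Transfer-Ownership" in prompt else "None"


def toy_reflect(query: str, prediction: str, annotation: str) -> str:
    """Hypothetical stand-in for LLM reflection: turns an error into a rule."""
    trigger = query.split()[1]
    return f"The word {trigger} signals a {annotation} event"
```

For example, calling `train(toy_llm, toy_reflect, [("Google acquired Fitbit", "Transfer-Ownership")])` yields one rule from the single error sample, and a later `infer` call on a query containing "acquired" retrieves that rule into the prompt. In a real system both `llm` and `reflect` would be calls to the same LLM with different prompts.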